Streamlining Local AI Experimentation: Discover the Power of local.ai

Introducing local.ai, an open-source desktop application for downloading, managing, and running AI models on your own computer. It lets developers, researchers, students, and AI enthusiasts experiment with capable models locally, without the need for GPU-powered cloud infrastructure.

Unlock the Potential of Local AI

local.ai is built in Rust, which keeps the app efficient and lightweight, and it runs natively on Windows, macOS, and Linux. Its interface keeps the whole workflow in one place, from downloading a model to serving it to your own applications.

Streamlined Model Management

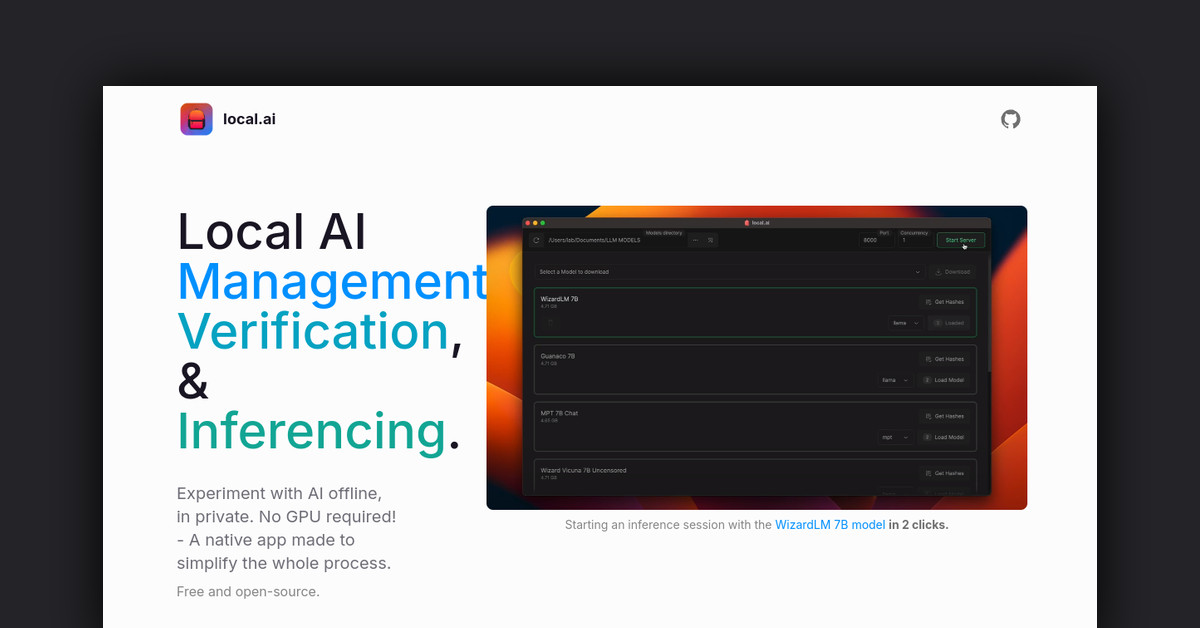

The Model Downloader lets you search for and download openly available AI models. Downloads are resumable, so an interrupted multi-gigabyte transfer does not have to start over. The Model Manager then keeps all your models in one place, sorts them by how often you use them, and lets you store them in any directory you choose.
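Resumable downloads generally rely on HTTP range requests: if a partial file already exists, the client asks the server only for the bytes after its current length. The Python sketch below illustrates that general technique; it is not local.ai's actual Rust implementation, and the function names `resume_headers` and `download_resumable` are hypothetical. It assumes the model host supports `Range` requests.

```python
import os
import urllib.error
import urllib.request


def resume_headers(dest_path: str) -> dict:
    """Return headers asking the server to resume after any bytes already on disk."""
    if os.path.exists(dest_path):
        offset = os.path.getsize(dest_path)
        if offset > 0:
            # Request only the remaining bytes, starting at the current file length.
            return {"Range": f"bytes={offset}-"}
    return {}


def download_resumable(url: str, dest_path: str, chunk_size: int = 1 << 20) -> None:
    """Download url to dest_path, appending to a partial file left by an earlier attempt."""
    headers = resume_headers(dest_path)
    request = urllib.request.Request(url, headers=headers)
    try:
        response = urllib.request.urlopen(request)
    except urllib.error.HTTPError as err:
        # 416 Range Not Satisfiable: the requested start is at or past the end,
        # so assume the partial file is already complete.
        if err.code == 416:
            return
        raise
    with response:
        # 206 Partial Content means the server honoured the Range header;
        # a plain 200 means it is resending the whole file, so overwrite instead.
        mode = "ab" if headers and response.status == 206 else "wb"
        with open(dest_path, mode) as out:
            while True:
                chunk = response.read(chunk_size)
                if not chunk:
                    break
                out.write(chunk)
```

Checking the status code before appending matters: a server that ignores `Range` replies with the full file, and appending it to the partial copy would corrupt the model.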

Robust Integrity Verification

Verifying model integrity matters, especially for large files downloaded from the internet. local.ai's Digest Verifier computes SHA256 and BLAKE3 checksums of your models and checks them against a database of known-good digests, and it shows model information cards with license and usage details.
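Digest verification boils down to hashing the file in chunks and comparing the result with a published value. The minimal Python sketch below uses SHA-256 from the standard library; where the known-good digest comes from is an assumption here (local.ai uses its own database), and the helper names are hypothetical. BLAKE3 is not in Python's standard library; the third-party `blake3` package offers a hasher with a similar `update`/`hexdigest` interface.

```python
import hashlib


def sha256_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Compute a file's SHA-256 hex digest without loading it all into memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        # Read fixed-size chunks until read() returns b"" at end of file.
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model(path: str, known_good_sha256: str) -> bool:
    """Return True if the file matches a known-good SHA-256 digest (hex string)."""
    # Normalise whitespace and case so "ABC..." and "abc...\n" both compare correctly.
    return sha256_file(path) == known_good_sha256.strip().lower()
```

Hashing in chunks keeps memory use flat, which matters for multi-gigabyte model files.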

Seamless Inferencing Server

The Inferencing Server starts a local server in a few clicks: load a model, launch the server, and send it queries from your own applications. The app also writes your conversations and results to .mdx files for later reference and integration.
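Once the server is running, any HTTP client can talk to it. The Python sketch below shows the general shape of such a client. The URL `http://localhost:8000/completions` and the JSON fields `prompt`, `max_tokens`, and `temperature` are assumptions modelled on common completion APIs, not confirmed local.ai details; check the app's server panel for the actual address and parameters.

```python
import json
import urllib.request

# Hypothetical endpoint: replace with the host, port, and path the app shows.
SERVER_URL = "http://localhost:8000/completions"


def build_request(prompt: str, max_tokens: int = 128, temperature: float = 0.7) -> urllib.request.Request:
    """Build a JSON POST request using assumed completion-style field names."""
    body = json.dumps(
        {"prompt": prompt, "max_tokens": max_tokens, "temperature": temperature}
    ).encode("utf-8")
    return urllib.request.Request(
        SERVER_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def query(prompt: str) -> str:
    """Send the prompt and return the raw response body as text."""
    with urllib.request.urlopen(build_request(prompt), timeout=120) as response:
        return response.read().decode("utf-8")
```

`query` returns the body unparsed because the response format (a single JSON object or a token stream) depends on the server; a streaming server would be read line by line instead.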

Tailored for Diverse Needs

local.ai caters to a wide range of users:

Developers: Quickly test and integrate AI models into your prototypes and applications, avoiding cloud lock-in and streamlining your workflow.

Researchers: Experiment with AI capabilities offline using your own data, without the need for enterprise-grade hardware.

Students: Learn real-world AI skills and explore state-of-the-art models on your personal computer.

AI Enthusiasts: Dive into the latest AI advancements with an easy-to-use, open-source tool.

Open-Source Community Support

As an open-source project, local.ai relies on the community for support and contributions. Visit the GitHub repository to get help, join discussions, and report bugs or request features. Join the growing community of developers, researchers, and AI enthusiasts who are shaping the future of local AI experimentation.

Key Features at a Glance

- Powerful Native App: Built with Rust for efficiency, small install size, and CPU-optimized inferencing with support for quantization.

- Intuitive Model Management: Resumable high-speed downloading, usage-based model sorting, and directory-agnostic storage.

- Robust Digest Verification: Ensure model integrity with SHA256 and BLAKE3 checksums, and access detailed model information.

- One-Click Inferencing Server: Start a local server to power your AI applications in minutes, with customizable inference parameters and remote vocab support.

Experience local AI with local.ai, your gateway to seamless AI experimentation and model integration. Download the app and start running models on your own machine today.